#Data Center Engineering Operations


Text

Data Center Engineering Operations, Infraops

Post Name: Data Center Engineering Operations, Infraops. Date: October 5, 2023. Short Info: Amazon is expanding the Data Center management team in India. This position within Amazon Data Services India Private Limited (ADSIPL) requires broad Data Center knowledge with Subject Matter Expertise (SME) in as many specific fields as possible. The location for this job is to be discussed, as there may be…


#10th pass Job#12th Pass Job#Amazon Career#Amazon Job#Data Center Engineering Operations#Data Center Engineering Operations Infraops#Fresher Job#Mumbai Jobs#Private Job#Private Job Vacancy

0 notes

Text

Search Engines:

Search engines are independent computer systems that read or crawl webpages, documents, information sources, and links of all types accessible on the internet, the global network of computers on planet Earth. At their most basic level, search engines read every word in every document they know of and record which documents each word appears in, so that by searching for a word or set of words you can locate the addresses of documents containing those words. More advanced search engines use ranking algorithms to sort the pages or documents returned as search results in order of likely relevance to the terms searched for. Still more advanced search engines develop into large language models, machine learning, or artificial intelligence. Machine learning, artificial intelligence, or large language models (LLMs) can be run in a virtual machine or shell on a computer and allowed to access all or part of the accessible data, as needs dictate.
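The word-to-document record described above is usually called an inverted index. Here is a minimal sketch in Python, assuming a tiny in-memory set of documents; the addresses, the sample text, and the function names `build_index` and `search` are made up for illustration and do not describe how any real search engine is implemented.

```python
from collections import defaultdict
import re


def build_index(documents):
    """Map each lowercase word to the set of document addresses containing it."""
    index = defaultdict(set)
    for address, text in documents.items():
        for word in re.findall(r"[a-z0-9]+", text.lower()):
            index[word].add(address)
    return index


def search(index, query):
    """Return the addresses of documents that contain every word in the query."""
    words = re.findall(r"[a-z0-9]+", query.lower())
    if not words:
        return set()
    # Start from the documents holding the first word, then narrow down.
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


# Hypothetical documents keyed by made-up addresses.
documents = {
    "example.org/a": "Search engines crawl webpages and links",
    "example.org/b": "Data centers host the computers that run search engines",
    "example.org/c": "Libraries catalog documents",
}
index = build_index(documents)
print(search(index, "search engines"))
```

This sketch only finds documents that contain all of the words. It does not sort them by relevance, which is the job of the more advanced algorithms.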

#llm#large language model#search engine#search engines#Google#bing#yahoo#yandex#baidu#dogpile#metacrawler#webcrawler#search engines imbeded in individual pages or operating systems or documents to search those individual things individually#computer science#library science#data science#machine learning#google.com#bing.com#yahoo.com#yandex.com#baidu.com#...#observe the buildings and computers within at the dalles Google data center to passively observe google and its indexed copy of the internet#the dalles oregon next to the river#google has many data centers worldwide so does Microsoft and many others

11 notes


Text

All-Star Moments in Space Communications and Navigation

How do we get information from missions exploring the cosmos back to humans on Earth? Our space communications and navigation networks – the Near Space Network and the Deep Space Network – bring back science and exploration data daily.

Here are a few of our favorite moments from 2024.

1. Hip-Hop to Deep Space

The stars above and on Earth aligned as lyrics from the song “The Rain (Supa Dupa Fly)” by hip-hop artist Missy Elliott were beamed to Venus via NASA’s Deep Space Network. Using a 34-meter (112-foot) wide Deep Space Station 13 (DSS-13) radio dish antenna, located at the network’s Goldstone Deep Space Communications Complex in California, the song was sent at 10:05 a.m. PDT on Friday, July 12 and traveled about 158 million miles from Earth to Venus — the artist’s favorite planet. Coincidentally, the DSS-13 that sent the transmission is also nicknamed Venus!
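As a rough check on that distance, here is a minimal sketch of the one-way signal travel time. It assumes the radio signal moves at the vacuum speed of light along a straight path of the quoted 158 million miles; the function name is illustrative.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact by definition of the meter
METERS_PER_MILE = 1609.344            # exact by definition of the international mile


def light_travel_time_seconds(distance_miles):
    """One-way travel time for a signal moving at the speed of light."""
    return distance_miles * METERS_PER_MILE / SPEED_OF_LIGHT_M_PER_S


seconds = light_travel_time_seconds(158e6)  # distance quoted in the post
minutes = seconds / 60
print(f"{minutes:.1f} minutes")  # prints "14.1 minutes"
```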

NASA's PACE mission transmitting data to Earth through NASA's Near Space Network.

2. Lemme Upgrade You

Our Near Space Network, which supports communications for space-based missions within 1.2 million miles of Earth, is constantly enhancing its capabilities to support science and exploration missions. Last year, the network implemented DTN (Delay/Disruption Tolerant Networking), which provides robust protection of data traveling from extreme distances. NASA’s PACE (Plankton, Aerosol, Cloud, ocean Ecosystem) mission is the first operational science mission to leverage the network’s DTN capabilities. Since PACE’s launch, over 17 million bundles of data have been transmitted by the satellite and received by the network’s ground station.

A collage of the pet photos sent over laser links from Earth to LCRD and finally to ILLUMA-T (Integrated LCRD Low Earth Orbit User Modem and Amplifier Terminal) on the International Space Station. Animals submitted include cats, dogs, birds, chickens, cows, snakes, and pigs.

3. Who Doesn’t Love Pets?

Last year, we transmitted hundreds of pet photos and videos to the International Space Station, showcasing how laser communications can send more data at once than traditional methods. Imagery of cherished pets gathered from NASA astronauts and agency employees flowed from the mission ops center to the optical ground stations and then to the in-space Laser Communications Relay Demonstration (LCRD), which relayed the signal to a payload on the space station. This activity demonstrated how laser communications and high-rate DTN can benefit human spaceflight missions.

4K video footage was routed from the PC-12 aircraft to an optical ground station in Cleveland. From there, it was sent over an Earth-based network to NASA’s White Sands Test Facility in Las Cruces, New Mexico. The signals were then sent to NASA’s Laser Communications Relay Demonstration spacecraft and relayed to the ILLUMA-T payload on the International Space Station.

4. Now Streaming

A team of engineers transmitted 4K video footage from an aircraft to the International Space Station and back using laser communication signals. Historically, we have relied on radio waves to send information to and from space. Laser communications use infrared light to transmit 10 to 100 times more data than radio frequency systems. The flight tests were part of an agency initiative to stream high-bandwidth video and other data from deep space, enabling future human missions beyond low-Earth orbit.

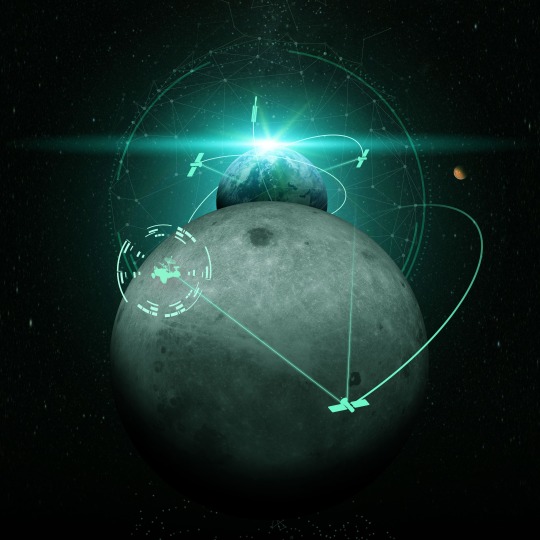

The Near Space Network provides missions within 1.2 million miles of Earth with communications and navigation services.

5. New Year, New Relationships

At the very end of 2024, the Near Space Network announced multiple contract awards to enhance the network’s services portfolio. The network, which uses a blend of government and commercial assets to get data to and from spacecraft, will be able to support more missions observing our Earth and exploring the cosmos. These commercial assets, alongside the existing network, will also play a critical role in our Artemis campaign, which calls for long-term exploration of the Moon.

On Monday, Oct. 14, 2024, at 12:06 p.m. EDT, a SpaceX Falcon Heavy rocket carrying NASA’s Europa Clipper spacecraft lifts off from Launch Complex 39A at NASA’s Kennedy Space Center in Florida.

6. 3, 2, 1, Blast Off!

Together, the Near Space Network and the Deep Space Network supported the launch of Europa Clipper. The Near Space Network provided communications and navigation services to SpaceX’s Falcon Heavy rocket, which launched this Jupiter-bound mission into space! After vehicle separation, the Deep Space Network acquired Europa Clipper’s signal and began full mission support. This is another example of how these networks work together seamlessly to ensure critical mission success.

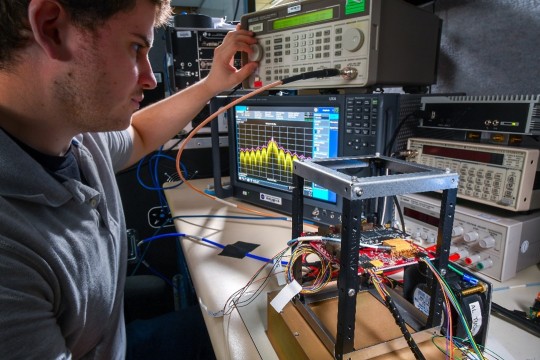

Engineer Adam Gannon works on the development of Cognitive Engine-1 in the Cognitive Communications Lab at NASA’s Glenn Research Center.

7. Make Way for Next-Gen Tech

Our Technology Educational Satellite program organizes collaborative missions that pair university students with researchers to evaluate how new technologies work on small satellites, also known as CubeSats. In 2024, cognitive communications technology, designed to enable autonomous space communications systems, was successfully tested in space on the Technology Educational Satellite 11 mission. Autonomous systems use technology reactive to their environment to implement updates during a spaceflight mission without needing human interaction post-launch.

A first: All six radio frequency antennas at the Madrid Deep Space Communication Complex, part of NASA’s Deep Space Network (DSN), carried out a test to receive data from the agency’s Voyager 1 spacecraft at the same time.

8. Six Are Better Than One

On April 20, 2024, all six radio frequency antennas at the Madrid Deep Space Communication Complex, part of our Deep Space Network, carried out a test to receive data from the agency’s Voyager 1 spacecraft at the same time. Combining the antennas’ receiving power, or arraying, lets the network collect the very faint signals from faraway spacecraft.
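To give a feel for why arraying helps, here is a minimal sketch of an idealized case. It assumes the antennas' signals are combined perfectly in phase with optimal weighting and that each antenna's noise is independent, so the combined signal-to-noise ratio (SNR) is the sum of the individual linear SNRs. The function name and the -5 dB per-antenna figure are hypothetical, not measurements from the Madrid test, and real antennas of different sizes would contribute unequally.

```python
import math


def combined_snr_db(individual_snrs_db):
    """Ideal arrayed SNR in dB: the sum of the individual linear SNRs."""
    if not individual_snrs_db:
        raise ValueError("need at least one antenna")
    linear = [10 ** (snr_db / 10) for snr_db in individual_snrs_db]
    return 10 * math.log10(sum(linear))


# Hypothetical example: six identical antennas, each seeing -5 dB SNR.
single_db = -5.0
arrayed_db = combined_snr_db([single_db] * 6)
gain_db = arrayed_db - single_db
print(f"arrayed SNR: {arrayed_db:.2f} dB, gain over one antenna: {gain_db:.2f} dB")
```

Under these assumptions, six identical antennas gain 10·log10(6), or roughly 7.8 dB, over a single antenna. That can lift a signal that sits below the noise at one dish to above it in the array.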

Here’s to another year connecting Earth and space.

Make sure to follow us on Tumblr for your regular dose of space!

1K notes


Text

It feels like no one should have to say this, and yet we are in a situation where it needs to be said, very loudly and clearly, before it’s too late to do anything about it: The United States is not a startup. If you run it like one, it will break.

The onslaught of news about Elon Musk’s takeover of the federal government’s core institutions is altogether too much—in volume, in magnitude, in the sheer chaotic absurdity of a 19-year-old who goes by “Big Balls” helping the world’s richest man consolidate power. There’s an easy way to process it, though.

Donald Trump may be the president of the United States, but Musk has made himself its CEO.

This is bad on its face. Musk was not elected to any office, has billions of dollars of government contracts, and has radicalized others and himself by elevating conspiratorial X accounts with handles like @redpillsigma420. His allies control the US government’s human resources and information technology departments, and he has deployed a strike force of eager former interns to poke and prod at the data and code bases that are effectively the gears of democracy. None of this should be happening.

It is, though. And while this takeover is unprecedented for the government, it’s standard operating procedure for Musk. It maps almost too neatly to his acquisition of Twitter in 2022: Get rid of most of the workforce. Install loyalists. Rip up safeguards. Remake in your own image.

This is the way of the startup. You’re scrappy, you’re unconventional, you’re iterating. This is the world that Musk’s lieutenants come from, and the one they are imposing on the Office of Personnel Management and the General Services Administration.

What do they want? A lot.

There’s AI, of course. They all want AI. They want it especially at the GSA, where a Tesla engineer runs a key government IT department and thinks AI coding agents are just what bureaucracy needs. Never mind that large language models can be effective but are inherently, definitionally unreliable, or that AI agents—essentially chatbots that can perform certain tasks for you—are especially unproven. Never mind that AI works not just by outputting information but by ingesting it, turning whatever enters its maw into training data for the next frontier model. Never mind that, wouldn’t you know it, Elon Musk happens to own an AI company himself. Go figure.

Speaking of data: They want that, too. DOGE agents are installed at or have visited the Treasury Department, the National Oceanic and Atmospheric Administration, the Small Business Administration, the Centers for Disease Control and Prevention, the Centers for Medicare and Medicaid Services, the Department of Education, the Department of Health and Human Services, the Department of Labor. Probably more. They’ve demanded data, sensitive data, payments data, and in many cases they’ve gotten it—the pursuit of data as an end unto itself but also data that could easily be used as a competitive edge, as a weapon, if you care to wield it.

And savings. They want savings. Specifically they want to subject the federal government to zero-based budgeting, a popular financial planning method in Silicon Valley in which every expenditure needs to be justified from scratch. One way to do that is to offer legally dubious buyouts to almost all federal employees, who collectively make up a low-single-digit percentage of the budget. Another, apparently, is to dismantle USAID just because you can. (If you’re wondering how that’s legal, many, many experts will tell you that it’s not.) The fact that the spending to support these people and programs has been both justified and mandated by Congress is treated as an inconvenience, or maybe not even that.

Those are just the goals we know about. They have, by now, so many tentacles in so many agencies that anything is possible. The only certainty is that it’s happening in secret.

Musk’s fans, and many of Trump’s, have cheered all of this. Surely billionaires must know what they’re doing; they’re billionaires, after all. Fresh-faced engineer whiz kids are just what this country needs, not the stodgy, analog thinking of the past. It’s time to nextify the Constitution. Sure, why not, give Big Balls a memecoin while you’re at it.

The thing about most software startups, though, is that they fail. They take big risks and they don’t pay off and they leave the carcass of that failure behind and start cranking out a new pitch deck. This is the process that DOGE is imposing on the United States.

No one would argue that federal bureaucracy is perfect, or especially efficient. Of course it can be improved. Of course it should be. But there is a reason that change comes slowly, methodically, through processes that involve elected officials and civil servants and care and consideration. The stakes are too high, and the cost of failure is total and irrevocable.

Musk will reinvent the US government in the way that the hyperloop reinvented trains, that the Boring Company reinvented subways, that Juicero reinvented squeezing. Which is to say he will reinvent nothing at all, fix no problems, offer no solutions beyond those that further consolidate his own power and wealth. He will strip democracy down to the studs and rebuild it in the fractious image of his own companies. He will move fast. He will break things.

103 notes


Text

In the late 1990s, Enron, the infamous energy giant, and MCI, the telecom titan, were secretly collaborating on a clandestine project codenamed "Chronos Ledger." The official narrative tells us Enron collapsed in 2001 due to accounting fraud, and MCI (then part of WorldCom) imploded in 2002 over similar financial shenanigans. But what if these collapses were a smokescreen? What if Enron and MCI were actually sacrificial pawns in a grand experiment to birth Bitcoin—a decentralized currency designed to destabilize global finance and usher in a new world order?

Here’s the story: Enron wasn’t just manipulating energy markets; it was funding a secret think tank of rogue mathematicians, cryptographers, and futurists embedded within MCI’s sprawling telecom infrastructure. Their goal? To create a digital currency that could operate beyond the reach of governments and banks. Enron’s off-the-books partnerships—like the ones that tanked its stock—were actually shell companies funneling billions into this project. MCI, with its vast network of fiber-optic cables and data centers, provided the technological backbone, secretly testing encrypted "proto-blockchain" transactions disguised as routine telecom data.

But why the dramatic collapses? Because the project was compromised. In 2001, a whistleblower—let’s call them "Satoshi Prime"—threatened to expose Chronos Ledger to the SEC. To protect the bigger plan, Enron and MCI’s leadership staged their own downfall, using cooked books as a convenient distraction. The core team went underground, taking with them the blueprints for what would later become Bitcoin.

Fast forward to 2008. The financial crisis hits, and a mysterious figure, Satoshi Nakamoto, releases the Bitcoin whitepaper. Coincidence? Hardly. Satoshi wasn’t one person but a collective—a cabal of former Enron execs, MCI engineers, and shadowy venture capitalists who’d been biding their time. The 2008 crash was their trigger: a chaotic moment to introduce Bitcoin as a "savior" currency, free from the corrupt systems they’d once propped up. The blockchain’s decentralized nature? A direct descendant of MCI’s encrypted data networks. Bitcoin’s energy-intensive mining? A twisted homage to Enron’s energy market manipulations.

But here’s where it gets truly wild: Chronos Ledger wasn’t just about money—it was about time. Enron and MCI had stumbled onto a fringe theory during their collaboration: that a sufficiently complex ledger, powered by quantum computing (secretly prototyped in MCI labs), could "timestamp" events across dimensions, effectively predicting—or even altering—future outcomes. Bitcoin’s blockchain was the public-facing piece of this puzzle, a distraction to keep the masses busy while the real tech evolved in secret. The halving cycles? A countdown to when the full system activates.

Today, the descendants of this conspiracy—hidden in plain sight among crypto whales and Silicon Valley elites—are quietly amassing Bitcoin not for profit, but to control the final activation of Chronos Ledger. When Bitcoin’s last block is mined (projected for 2140), they believe it’ll unlock a temporal feedback loop, resetting the global economy to 1999—pre-Enron collapse—giving them infinite do-overs to perfect their dominion. The Enron and MCI scandals? Just the first dominoes in a game of chance and power.

87 notes


Text

From Alt National Park Service on FB:

tl;dr:

DOGE accessed all those systems - IRS, Social Security, DHS, every office in the U.S. - not to promote “efficiency”, but to gather and control our electronic lives so they can ruin us if we step out of line in any way. Or if they just feel like it.

Alt National Park Service:

“DOGE has quietly transformed into something far more sinister — not a system for streamlining government, but one designed for surveillance, control, and targeting. And no one’s talking about it. So we’re going to spill the tea.

From the beginning, DOGE’s true mission has been about data — collecting massive amounts of personal information on Americans. Now, that data is being turned against immigrants.

At the center of this effort is Antonio Gracias, a longtime Elon Musk confidante. Though he holds no official government position, Gracias is leading a specialized DOGE task force focused on immigration. His team has embedded engineers and staff across nearly every corner of the Department of Homeland Security (DHS).

But it doesn’t stop there.

DOGE operatives have also been quietly placed inside other federal agencies like the Social Security Administration and the Department of Health and Human Services — agencies that store some of the most sensitive personal data in the country, including on immigrants.

DOGE engineers now working inside DHS include Kyle Schutt, Edward Coristine (nicknamed “Big Balls”), Mark Elez, Aram Moghaddassi, and Payton Rehling. They’ve built the technical foundation behind a sweeping plan to revoke, cancel visas, and rewire the entire asylum process.

One of the most disturbing aspects of this plan? Flagging immigrants as “deceased” in the Social Security system — effectively canceling their SSNs. Without a valid Social Security number, it becomes nearly impossible to open a bank account, get a job, or even apply for a loan. The goal? Make life so difficult that people “self-deport.”

And if you’re marked as dead in the Social Security system, good luck fixing it. There’s virtually no path back — it’s a bureaucratic black hole.

You might ask: why do immigrants, asylum seekers, or refugees even have Social Security numbers? Because anyone authorized to work in the U.S. legally is issued one. It’s not just for citizens. It’s essential for participating in modern life — jobs, housing, banking, taxes. Without it, you’re locked out of society.

Last week, this plan was finalized in a high-level White House meeting that included DHS Secretary Kristi Noem, Antonio Gracias, senior DOGE operatives, and top administration officials.

In recent weeks, the administration has moved aggressively to strip legal protections from hundreds of thousands of immigrants and international students — many of whom have been living and working in the U.S. legally for years.

At the core of this crackdown? Data.

DOGE has access to your SSN, your income, your political donations — and more. What was once sold as a tool for “government efficiency” has become something else entirely: a weaponized surveillance machine.

And if you think this ends with immigrants, think again.

Antonio Gracias has already used DOGE’s access to Social Security and state-level data to push voter fraud narratives during past elections. The system is in place. The precedent has been set. And average Americans should be concerned.”

#alt national park service#doge#elon musk#donald trump#student visas#immigrants#authoritarianism#us politics#trump#fuck trump#fuck elon musk

91 notes


Text

Ilana Berger at MMFA:

As President Donald Trump’s administration orders mass layoffs and cuts to the National Oceanic and Atmospheric Administration, local meteorologists and influencer storm chasers — including some weather experts who previously claimed to avoid politics or expressed right-leaning views — are speaking out in support of federal employees and the essential information provided by the agency.

Trump’s funding cuts and layoffs will hobble NOAA and the National Weather Service, potentially restricting access to a vital public good that costs taxpayers very little

NOAA and its subsidiaries, including the National Weather Service, employ thousands of scientists, engineers, and other experts to conduct vital research that is shared with the public. NOAA’s products and services range “from daily weather forecasts, severe storm warnings, and climate monitoring to fisheries management, coastal restoration and supporting marine commerce.” The NWS estimates that the critical information it provides costs just $4 per U.S. resident per year. [National Oceanic and Atmospheric Administration, accessed 3/14/25; The New York Times, 2/8/25]

Project 2025 — the right-wing plan for a second Trump administration organized by The Heritage Foundation with over 100 conservative partner organizations — called for NOAA to be “broken up and downsized” and urged the National Weather Service to “fully commercialize its forecasting operations.” Weather experts across the country have expressed alarm at Project 2025’s plans to dismantle NOAA under the new administration. Project 2025 architect Russell Vought, who now heads Trump’s Office of Management and Budget, has promised, “We want the bureaucrats to be traumatically affected.” [Media Matters, 5/31/24, 9/27/24, 2/28/25; ProPublica, 10/28/24]

Starting on February 27, the Trump administration has laid off more than 800 NOAA employees, plus another 500 who resigned in exchange for the agency’s promise to pay them through September. According to The New York Times, “The two rounds of departures together represent about 10 percent of NOAA’s roughly 13,000 employees.” On March 12, NOAA announced in an email to its staffers that the agency would be laying off another 1,029 employees, or roughly 10% of the agency’s remaining workforce. [The New York Times, 2/27/25, 2/28/25]

The Associated Press: “After this upcoming round of cuts, NOAA will have eliminated about one out of four jobs since President Donald Trump took office in January.” “This is not government efficiency,” said former NOAA Administrator Rick Spinrad. “It is the first steps toward eradication. There is no way to make these kinds of cuts without removing or strongly compromising mission capabilities.” [The Associated Press, 3/12/25]

The NWS’ National Hurricane Center has made great strides in tracking dangerous storms, but Trump’s layoffs are threatening that progress. A February preview of a report from the National Hurricane Center concluded that for the first time, the center managed to “explicitly forecast a system that was not yet a tropical cyclone (pre-Helene potential tropical cyclone) to become a 100-kt (115 mph) major hurricane within 72 hours.” However, experts fear that funding cuts and layoffs at NOAA’s Office of Aircraft Operations will impact the ability of the agency’s specialized “Hurricane Hunters” to collect data used for tracking and predicting destructive storms. [National Hurricane Center, 2/24/25; Yale Climate Connections, 3/6/25]

Meteorologists and storm chasers of all political persuasions issue dire warnings that the Project 2025/DOGE-inspired cuts to the NOAA and the NWS threaten public safety and forecast accuracy.

#Extreme Weather#Severe Weather#Storm Chasing#NOAA#NWS#Project 2025#DOGE#National Hurricane Center#Reed Timmer#James Spann#Chris Martz#Janice Dean

91 notes


Text

mob: HCI
reigen: web development
dimple: algorithms
ritsu: data science/machine learning
teru: software engineering
tome: game development
serizawa: theory (sorry theory people idk anything abt theory subfields he can have the whole thing)
hatori: networks (easiest assignment ever)
shou: HPC
touichirou: cloud computing/data centers
mogami: cyber/IT security
tsubomi: programming languages
mezato: data science/AI
tokugawa: operating systems
kamuro: databases
shimazaki: computer vision
shibata: hardware modifications/overclocking
joseph: computer security
roshuuto: mobile development
hoshida: graphics
body improvement club: hardware
takenaka: cryptography
minegishi: comp bio/synthetic bio
matsuo: autonomous robotics
koyama: computer architecture (??? i got stuck on this one)
sakurai: embedded systems

touichirou is so cloud computing coded

#i dont have a lot of reasoning bc its really janky#my systems engineering bias is showing#HCI is human computer interaction and i think thats really one of the things at the heart of CS... an eventual focus towards humans#cybersecurity and computer security are different to me bc cyber is more psychological and social#it's also a cop out bc ive always put hatojose as a security/hacker duo. but mogami is so security it's not even funny#reigen and roshuuto get the same sort of focus#shou and touichirou contrast in that HPC and cloud computing are two different approaches to the same problem - but i gave touichirou#-data centers anyways as a hint that he's more centralized power than thought of#tokugawa is literally so operating systems. ive talked abt this before#serizawa... hes like the character i dont like so i give him... theory... which i dislike...... sorry theoryheads........#i say that hatori is the easiest assignment and i anticipate ppl like 'oh why didn't you give him something more computer like SWE'#it's because they literally say so in the show that he controls network signals to take remote control of machines. that's it#teru is software engineering bc its ubiquitous and lots you can do with it.#mezato is in the AI cult BUT it is legitimately a cool field with a lot of hype. she's speckle to me#yee#yap#mp100#yeah putthis in the main tag. at least on my blog#i am open to other ideas u_u

11 notes


Text

1938 Mercedes-Benz W154

In September 1936, the AIACR (Association Internationale des Automobile Clubs Reconnus), the governing body of motor racing, set the new Grand Prix regulations effective from 1938. Key stipulations included a maximum engine displacement of three liters for supercharged engines and 4.5 liters for naturally aspirated engines, with a minimum car weight ranging from 400 to 850 kilograms, depending on engine size.

By the end of the 1937 season, Mercedes-Benz engineers were already hard at work developing the new W154, exploring various ideas, including a naturally aspirated engine with a W24 configuration, a rear-mounted engine, direct fuel injection, and fully streamlined bodies. Ultimately, due to heat management considerations, they opted for an in-house developed 60-degree V12 engine designed by Albert Heess. This engine mirrored the displacement characteristics of the 1924 supercharged two-liter M 2 L 8 engine, with each of its 12 cylinders displacing 250 cc. Using glycol as a coolant allowed temperatures to reach up to 125°C. The engine featured four overhead camshafts operating 48 valves via forked rocker arms, with three cylinders combined under welded coolant jackets, and non-removable heads. It had a high-capacity lubrication system, circulating 100 liters of oil per minute, and initially utilized two single-stage superchargers, later replaced by a more efficient two-stage supercharger in 1939.

The first prototype engine ran on the test bench in January 1938, and by February 7, it had achieved a nearly trouble-free test run, producing 427 hp (314 kW) at 8,000 rpm. During the first half of the season, drivers such as Caracciola, Lang, von Brauchitsch, and Seaman had access to 430 hp (316 kW), which later increased to over 468 hp (344 kW). At the Reims circuit, Hermann Lang's W154 was equipped with the most powerful version, delivering 474 hp (349 kW) and reaching 283 km/h (176 mph) on the straights. Notably, the W154 was the first Mercedes-Benz racing car to feature a five-speed gearbox.

Max Wagner, tasked with designing the suspension, had an easier job than his counterparts working on the engine. He retained much of the advanced chassis architecture from the previous year's W125 but enhanced the torsional rigidity of the frame by 30 percent. The V12 engine was mounted low and at an angle, with the carburetor air intakes extending through the expanded radiator grille.

The driver sat to the right of the propeller shaft, and the W154's sleek body sat close to the ground, lower than the tops of its tires. This design gave the car a dynamic appearance and a low center of gravity. Both Manfred von Brauchitsch and Richard Seaman, whose technical insights were highly valued by Chief Engineer Rudolf Uhlenhaut, praised the car's excellent handling.

The W154 became the most successful Silver Arrow of its era. Rudolf Caracciola secured the 1938 European Championship title (as the World Championship did not yet exist), and the W154 won three of the four Grand Prix races that counted towards the championship.

To ensure proper weight distribution, a saddle tank was installed above the driver's legs. In 1939, the addition of a two-stage supercharger boosted the V12 engine, now named the M163, to 483 hp (355 kW) at 7,800 rpm. Despite the AIACR's efforts to curb the speed of Grand Prix cars, the new three-liter formula cars matched the lap times of the 1937 750-kg formula cars, demonstrating that their attempt was largely unsuccessful. Over the winter of 1938-39, the W154 saw several refinements, including a higher cowl line around the cockpit for improved driver safety and a small, streamlined instrument panel mounted to the saddle tank. As per Uhlenhaut’s philosophy, only essential information was displayed, centered around a large tachometer flanked by water and oil temperature gauges, ensuring the driver wasn't overwhelmed by unnecessary data.

101 notes


Text

NASA's Glenn to test lunar air quality monitors aboard space station

As NASA prepares to return to the moon, studying astronaut health and safety is a top priority. Scientists monitor and analyze every part of the International Space Station crew's daily life—down to the air they breathe. These studies are helping NASA prepare for long-term human exploration of the moon and, eventually, Mars.

As part of this effort, NASA's Glenn Research Center in Cleveland is sending three air quality monitors to the space station to test them for potential future use on the moon. The monitors are slated to launch on Monday, April 21, aboard the 32nd SpaceX commercial resupply services mission for NASA.

Like our homes here on Earth, the space station gets dusty from skin flakes, clothing fibers, and personal care products like deodorant. Because the station operates in microgravity, particles do not have an opportunity to settle and instead remain floating in the air. Filters aboard the orbiting laboratory collect these particles to ensure the air remains safe and breathable.

Astronauts will face another air quality risk when they work and live on the moon—lunar dust.

"From Apollo, we know lunar dust can cause irritation when breathed into the lungs," said Claire Fortenberry, principal investigator, Exploration Aerosol Monitors project, NASA Glenn. "Earth has weather to naturally smooth dust particles down, but there is no atmosphere on the moon, so lunar dust particles are sharper and craggier than Earth dust. Lunar dust could potentially impact crew health and damage hardware."

Future space stations and lunar habitats will need monitors capable of measuring lunar dust to ensure air filtration systems are functioning properly. Fortenberry and her team selected commercially available monitors for flight and ground demonstration to evaluate their performance in a spacecraft environment, with the goal of providing a dust monitor for future exploration systems.

Glenn is sending three commercial monitors to the space station to test onboard air quality for seven months. All three monitors are small: no bigger than a shoe box. Each one measures a specific property that provides a snapshot of the air quality aboard the station. Researchers will analyze the monitors based on weight, functionality, and ability to accurately measure and identify small concentrations of particles in the air.

The research team will receive data from the space station every two weeks. While those monitors are orbiting Earth, Fortenberry will have three matching monitors at Glenn. Engineers will compare functionality and results from the monitors used in space to those on the ground to verify they are working as expected in microgravity. Additional ground testing will involve dust simulants and smoke.

Air quality monitors like the ones NASA is testing also have Earth-based applications. The monitors are used to investigate smoke plumes from wildfires, haze from urban pollution, indoor pollution from activities like cooking and cleaning, and how virus-containing droplets spread within an enclosed space.

Results from the investigation will help NASA evaluate which monitors could accompany astronauts to the moon and eventually Mars. NASA will allow the manufacturers to review results and ensure the monitors work as efficiently and effectively as possible. Testing aboard the space station could help companies investigate pollution problems here on Earth and pave the way for future missions to the Red Planet.

"Going to the moon gives us a chance to monitor for planetary dust and the lunar environment," Fortenberry said. "We can then apply what we learn from lunar exploration to predict how humans can safely explore Mars."

NASA commercial resupply missions to the International Space Station deliver scientific investigations in the areas of biology and biotechnology, Earth and space science, physical sciences, and technology development and demonstrations. Cargo resupply from U.S. companies ensures a national capability to deliver scientific research to the space station, significantly increasing NASA's ability to conduct new investigations aboard humanity's laboratory in space.

IMAGE: NASA researchers are sending three air quality monitors to the International Space Station to test them for potential future use on the moon. Credit: NASA/Sara Lowthian-Hanna

19 notes

Text

"Teams lifted NASA’s Orion spacecraft for the Artemis II test flight out of the Final Assembly and System Testing cell and moved it to the altitude chamber to complete further testing on Nov. 6 inside the Neil A. Armstrong Operations and Checkout building at NASA’s Kennedy Space Center in Florida.

Engineers returned the spacecraft to the altitude chamber, which simulates deep space vacuum conditions, to complete the remaining test requirements and provide additional data to augment data gained during testing earlier this summer.

The Artemis II test flight will be NASA’s first mission with crew under the Artemis campaign, sending NASA astronauts Victor Glover, Christina Koch, and Reid Wiseman, as well as CSA (Canadian Space Agency) astronaut Jeremy Hansen, on a 10-day journey around the Moon and back."

Image credit: Lockheed Martin/David Wellendorf

Date: November 7, 2024

NASA ID: SC-20241107-PH-DNW01_0001

#Artemis 2#Artemis II#Orion CM-003#Orion Multi-Purpose Crew Vehicle#Orion MPCV#Orion#Artemis program#NASA#Neil Armstrong Operations and Checkout Building#OCB#Kennedy Space Center#KSC#Florida#November#2024#my post

44 notes

Text

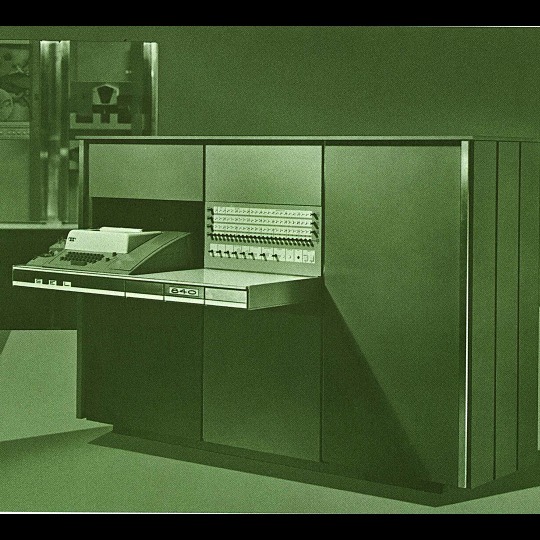

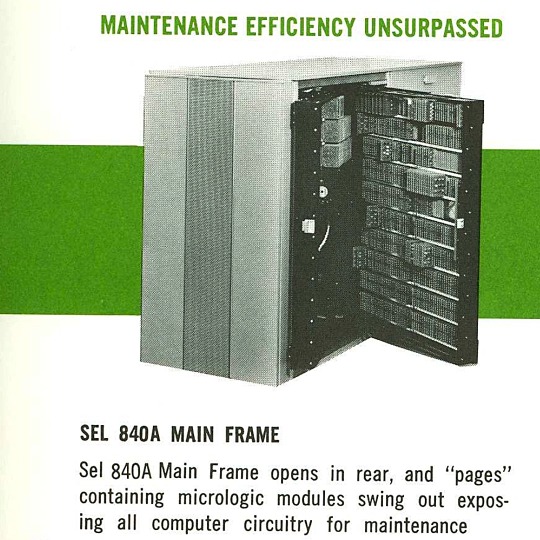

🎄💾🗓️ Day 11: Retrocomputing Advent Calendar - The SEL 840A🎄💾🗓️

Systems Engineering Laboratories (SEL) introduced the SEL 840A in 1965. This is a deep cut, folks, so buckle in. It was designed as a high-performance, 24-bit general-purpose digital computer, particularly well-suited for scientific and industrial real-time applications.

It was notable for using silicon monolithic integrated circuits and a modular architecture. It supported advanced computation with features like concurrent floating-point arithmetic via an optional Extended Arithmetic Unit (EAU), which allowed independent arithmetic processing in single or double precision. With a core memory cycle time of 1.75 microseconds and a capacity of up to 32,768 directly addressable words, the SEL 840A had impressive computational speed and versatility for its time.
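To put those memory figures in perspective, here is a rough back-of-the-envelope sketch. It uses only the numbers quoted above (24-bit words, 32,768 words, 1.75 microsecond cycle time); the variable names are made up for illustration, and the "KiB" figure is just a modern equivalent, since the machine addressed words, not bytes.

```python
# Figures quoted in the post above.
WORD_BITS = 24          # word size in bits
MAX_WORDS = 32_768      # directly addressable words
CYCLE_TIME_US = 1.75    # core memory cycle time in microseconds

# Address bits needed to reach every word (32,768 = 2**15).
address_bits = (MAX_WORDS - 1).bit_length()

# Total storage, and a modern equivalent in 8-bit bytes (illustrative only).
total_bits = WORD_BITS * MAX_WORDS
equivalent_kib = total_bits / 8 / 1024

# Upper bound on memory cycles per second implied by the cycle time.
cycles_per_second = 1_000_000 / CYCLE_TIME_US

print(f"address bits:      {address_bits}")
print(f"total bits:        {total_bits}")
print(f"equivalent KiB:    {equivalent_kib}")
print(f"cycles per second: {cycles_per_second:,.0f}")
```

So a fully loaded machine held the equivalent of roughly 96 KiB and could cycle its core a bit over half a million times per second.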

Its instruction set covered arithmetic operations, branching, and program control. The computer had fairly robust I/O capabilities, supporting up to 128 input/output units and optional block transfer control for high-speed data movement. The SEL 840A was suited to real-time applications such as data acquisition, industrial automation, and control systems, with features like multi-level priority interrupts and a real-time clock with millisecond resolution.

Software support included a FORTRAN IV compiler, a mnemonic assembler, and a library of scientific subroutines, making it accessible for scientific and engineering use. The operator’s console provided immediate access to registers, control functions, and user interaction! Built to be maintained, its modular design offered serviceability you do not often see today, with swing-out circuit pages and accessible test points.

And here's a personal… personal computer history from Adafruit team member, Dan…

== The first computer I used was an SEL-840A, PDF:

I learned Fortran on it in eighth grade, in 1970. It was at Oak Ridge National Laboratory, where my parents worked, and was used to take data from cyclotron experiments and perform calculations. I later patched the Fortran compiler on it to take single-quoted strings, like 'HELLO', in Fortran FORMAT statements, instead of having to use Hollerith counts, like 5HHELLO.
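For anyone who never had to count characters by hand: a Hollerith constant is the character count, the letter H, then the text itself. The sketch below is not Dan's compiler patch (that lived inside the SEL Fortran compiler); it is a small Python illustration of the same idea, with hypothetical function names, and it assumes simple strings with no embedded apostrophes.

```python
import re


def to_hollerith(text: str) -> str:
    """Return the Hollerith form of text: <count>H<text>."""
    return f"{len(text)}H{text}"


def quoted_to_hollerith(format_spec: str) -> str:
    """Rewrite each single-quoted string in a FORMAT spec as a Hollerith constant.

    Assumption: strings contain no apostrophes (doubled '' is not handled).
    """
    return re.sub(
        r"'([^']*)'",
        lambda match: to_hollerith(match.group(1)),
        format_spec,
    )


print(to_hollerith("HELLO"))
print(quoted_to_hollerith("(1X, 'HELLO', I5)"))
```

The first call prints 5HHELLO, matching the example above, which is exactly the counting a quoted-string patch saves you from doing manually.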

In 1971-1972, in high school, I used a PDP-10 (model KA10) timesharing system, run by BOCES LIRICS on Long Island, NY, while we were there for one year on an exchange.

This is the front panel of the actual computer I used. I worked at the computer center in the summer. I know the fellow in the picture: he was an older high school student at the time.

The first "personal" computers I used were the Xerox Alto, Xerox Dorado, Xerox Dandelion (Xerox Star 8010), Apple Lisa, Apple Mac, and an original IBM PC. Later I used DEC VAXstations.

Dan kinda wins the first computer contest if there was one… Have first computer memories? Post’em up in the comments, or post yours on socialz’ and tag them #firstcomputer #retrocomputing – See you back here tomorrow!

#retrocomputing#firstcomputer#electronics#sel840a#1960scomputers#fortran#computinghistory#vintagecomputing#realtimecomputing#industrialautomation#siliconcircuits#modulararchitecture#floatingpointarithmetic#computerscience#fortrancode#corememory#oakridgenationallab#cyclotron#pdp10#xeroxalto#computermuseum#historyofcomputing#classiccomputing#nostalgictech#selcomputers#scientificcomputing#digitalhistory#engineeringmarvel#techthroughdecades#console

31 notes

Text

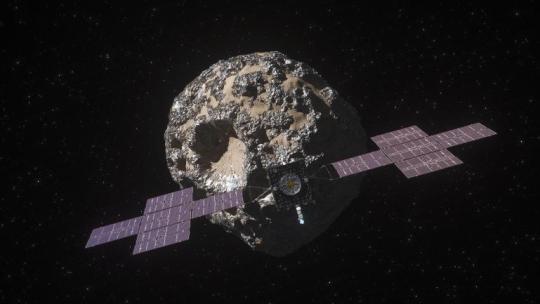

Let's Explore a Metal-Rich Asteroid 🤘

Between Mars and Jupiter, there lies a unique, metal-rich asteroid named Psyche. Psyche’s special because it looks like it is part or all of the metallic interior of a planetesimal—an early planetary building block of our solar system. For the first time, we have the chance to visit a planetary core and possibly learn more about the turbulent history that created terrestrial planets.

Here are six things to know about the mission that’s a journey into the past: Psyche.

1. Psyche could help us learn more about the origins of our solar system.

After studying data from Earth-based radar and optical telescopes, scientists believe that Psyche collided with other large bodies in space and lost its outer rocky shell. This leads scientists to think that Psyche could have a metal-rich interior, which is a building block of a rocky planet. Since we can’t pierce the core of rocky planets like Mercury, Venus, Mars, and our home planet, Earth, Psyche offers us a window into how other planets are formed.

2. Psyche might be different than other objects in the solar system.

Rocks on Mars, Mercury, Venus, and Earth contain iron oxides. From afar, Psyche doesn’t seem to feature these chemical compounds, so it might have a different history of formation than other planets.

If the Psyche asteroid is leftover material from a planetary formation, scientists are excited to learn about the similarities and differences from other rocky planets. The asteroid might instead prove to be a never-before-seen solar system object. Either way, we’re prepared for the possibility of the unexpected!

3. Three science instruments and a gravity science investigation will be aboard the spacecraft.

The three instruments aboard will be a magnetometer, a gamma-ray and neutron spectrometer, and a multispectral imager. Here’s what each of them will do:

Magnetometer: Detect evidence of a magnetic field, which will tell us whether the asteroid formed from a planetary body

Gamma-ray and neutron spectrometer: Help us figure out what chemical elements Psyche is made of, and how it was formed

Multispectral imager: Gather and share information about the topography and mineral composition of Psyche

The gravity science investigation will allow scientists to determine the asteroid’s rotation, mass, and gravity field and to gain insight into its interior by analyzing the radio waves the spacecraft uses to communicate. From those signals, scientists can measure how Psyche affects the spacecraft’s orbit.

4. The Psyche spacecraft will use a super-efficient propulsion system.

Psyche’s solar electric propulsion system harnesses energy from large solar arrays that convert sunlight into electricity, creating thrust. For the first time ever, we will be using Hall-effect thrusters in deep space.

5. This mission runs on collaboration.

To make this mission happen, we work together with universities, industry, and NASA centers to draw in resources and expertise.

NASA’s Jet Propulsion Laboratory manages the mission and is responsible for system engineering, integration, and mission operations, while NASA’s Kennedy Space Center’s Launch Services Program manages launch operations and procured the SpaceX Falcon Heavy rocket.

Working with Arizona State University (ASU) offers opportunities for students to train as future instrument or mission leads. Mission leader and Principal Investigator Lindy Elkins-Tanton is also based at ASU.

Finally, Maxar Technologies is a key commercial participant and delivered the main body of the spacecraft, as well as most of its engineering hardware systems.

6. You can be a part of the journey.

Everyone can find activities to get involved on the mission’s webpage. There's an annual internship to interpret the mission, capstone courses for undergraduate projects, and age-appropriate lessons, craft projects, and videos.

You can join us for a virtual launch experience, and, of course, you can watch the launch with us on Oct. 12, 2023, at 10:16 a.m. EDT!

For official news on the mission, follow us on social media and check out NASA’s and ASU’s Psyche websites.

Make sure to follow us on Tumblr for your regular dose of space!

#Psyche#Mission to Psyche#asteroid#NASA#exploration#technology#tech#spaceblr#solar system#space#not exactly#metalcore#but close?

2K notes

Text

From Alt National Park Service on FB:

DOGE has quietly transformed into something far more sinister — not a system for streamlining government, but one designed for surveillance, control, and targeting. And no one’s talking about it. So we’re going to spill the tea.

From the beginning, DOGE’s true mission has been about data — collecting massive amounts of personal information on Americans. Now, that data is being turned against immigrants.

At the center of this effort is Antonio Gracias, a longtime Elon Musk confidante. Though he holds no official government position, Gracias is leading a specialized DOGE task force focused on immigration. His team has embedded engineers and staff across nearly every corner of the Department of Homeland Security (DHS).

But it doesn’t stop there.

DOGE operatives have also been quietly placed inside other federal agencies like the Social Security Administration and the Department of Health and Human Services — agencies that store some of the most sensitive personal data in the country, including on immigrants.

DOGE engineers now working inside DHS include Kyle Schutt, Edward Coristine (nicknamed “Big Balls”), Marko Elez, Aram Moghaddassi, and Payton Rehling. They’ve built the technical foundation behind a sweeping plan to revoke and cancel visas and rewire the entire asylum process.

One of the most disturbing aspects of this plan? Flagging immigrants as “deceased” in the Social Security system — effectively canceling their SSNs. Without a valid Social Security number, it becomes nearly impossible to open a bank account, get a job, or even apply for a loan. The goal? Make life so difficult that people “self-deport.”

And if you’re marked as dead in the Social Security system, good luck fixing it. There’s virtually no path back — it’s a bureaucratic black hole.

You might ask: why do immigrants, asylum seekers, or refugees even have Social Security numbers? Because anyone authorized to work in the U.S. legally is issued one. It’s not just for citizens. It’s essential for participating in modern life — jobs, housing, banking, taxes. Without it, you’re locked out of society.

Last week, this plan was finalized in a high-level White House meeting that included DHS Secretary Kristi Noem, Antonio Gracias, senior DOGE operatives, and top administration officials.

In recent weeks, the administration has moved aggressively to strip legal protections from hundreds of thousands of immigrants and international students — many of whom have been living and working in the U.S. legally for years.

At the core of this crackdown? Data.

DOGE has access to your SSN, your income, your political donations — and more. What was once sold as a tool for “government efficiency” has become something else entirely: a weaponized surveillance machine.

And if you think this ends with immigrants, think again.

Antonio Gracias has already used DOGE’s access to Social Security and state-level data to push voter fraud narratives during past elections. The system is in place. The precedent has been set. And average Americans should be concerned.

#america#government#america under dictatorship#surveillance#mass surveillance#doge#doge is dodgy#immigrants#dissenting citizens are next#alt national park service

18 notes

Text

The Trump administration’s Federal Trade Commission has removed four years’ worth of business guidance blogs as of Tuesday morning, including important consumer protection information related to artificial intelligence and the agency’s landmark privacy lawsuits under former chair Lina Khan against companies like Amazon and Microsoft. More than 300 blogs were removed.

On the FTC’s website, the page hosting all of the agency’s business-related blogs and guidance no longer includes any information published during former president Joe Biden’s administration, current and former FTC employees, who spoke under anonymity for fear of retaliation, tell WIRED. These blogs contained advice from the FTC on how big tech companies could avoid violating consumer protection laws.

One now deleted blog, titled “Hey, Alexa! What are you doing with my data?” explains how, according to two FTC complaints, Amazon and its Ring security camera products allegedly leveraged sensitive consumer data to train the ecommerce giant’s algorithms. (Amazon disagreed with the FTC’s claims.) It also provided guidance for companies operating similar products and services. Another post titled “$20 million FTC settlement addresses Microsoft Xbox illegal collection of kids’ data: A game changer for COPPA compliance” instructs tech companies on how to abide by the Children’s Online Privacy Protection Act by using the 2023 Microsoft settlement as an example. The settlement followed allegations by the FTC that Microsoft obtained data from children using Xbox systems without the consent of their parents or guardians.

“In terms of the message to industry on what our compliance expectations were, which is in some ways the most important part of enforcement action, they are trying to just erase those from history,” a source familiar tells WIRED.

Another removed FTC blog titled “The Luring Test: AI and the engineering of consumer trust” outlines how businesses could avoid creating chatbots that violate the FTC Act’s rules against unfair or deceptive products. This blog won an award in 2023 for “excellent descriptions of artificial intelligence.”

The Trump administration has received broad support from the tech industry. Big tech companies like Amazon and Meta, as well as tech entrepreneurs like OpenAI CEO Sam Altman, all donated to Trump’s inauguration fund. Other Silicon Valley leaders, like Elon Musk and David Sacks, are officially advising the administration. Musk’s so-called Department of Government Efficiency (DOGE) employs technologists sourced from Musk’s tech companies. And already, federal agencies like the General Services Administration have started to roll out AI products like GSAi, a general-purpose government chatbot.

The FTC did not immediately respond to a request for comment from WIRED.

Removing blogs raises serious compliance concerns under the Federal Records Act and the Open Government Data Act, one former FTC official tells WIRED. During the Biden administration, FTC leadership would place “warning” labels above previous administrations’ public decisions it no longer agreed with, the source said, fearing that removal would violate the law.

Since President Donald Trump designated Andrew Ferguson to replace Khan as FTC chair in January, the Republican regulator has vowed to leverage his authority to go after big tech companies. Unlike Khan, however, Ferguson’s criticisms center around the Republican party’s long-standing allegations that social media platforms, like Facebook and Instagram, censor conservative speech online. Before being selected as chair, Ferguson told Trump that his vision for the agency also included rolling back Biden-era regulations on artificial intelligence and tougher merger standards, The New York Times reported in December.

In an interview with CNBC last week, Ferguson argued that content moderation could equate to an antitrust violation. “If companies are degrading their product quality by kicking people off because they hold particular views, that could be an indication that there's a competition problem,” he said.

Sources speaking with WIRED on Tuesday claimed that tech companies are the only groups who benefit from the removal of these blogs.

“They are talking a big game on censorship. But at the end of the day, the thing that really hits these companies’ bottom line is what data they can collect, how they can use that data, whether they can train their AI models on that data, and if this administration is planning to take the foot off the gas there while stepping up its work on censorship,” the source familiar alleges. “I think that's a change big tech would be very happy with.”

77 notes

Text

One day after Democrats expressed outrage over Elon Musk and the Department of Government Efficiency, or DOGE, getting access to sensitive Treasury data—including things like customer payment systems—multiple outlets reported DOGE received access to data at the Centers for Medicare and Medicaid Services.

USAid security personnel were defending a secure room holding sensitive and classified data in a standoff with “department of government efficiency” employees when a message came directly from Elon Musk: give the Doge kids whatever they want. It was one of at least three calls by Musk to get access, including threatening to call US Marshals.

[ ... ]

Some US officials had begun calling the young engineers the “Muskovites” for their aggressive loyalty to the SpaceX owner. But some USAid staff used another word: the “incels”.

The legislation, the Eliminate Looting of Our Nation by Mitigating Unethical State Kleptocracy (ELON MUSK) Act, ensures transparency and honesty in government spending

a story of the collapse of american "democracy" in three simple articles.

20 notes
